Empowering Discovery, Enhancing Knowledge

Latest News

Researchers Led By Georgia State Develop Noninvasive Method to Detect Early-Stage Liver Disease

A safer and more sensitive MRI contrast agent, developed by a team led by Georgia State University researchers, may provide the first effective, noninvasive method for detecting and diagnosing early-stage liver diseases, including liver fibrosis.

Technique to More Effectively Diagnose and Treat Cancer Developed by Georgia State Researchers

Georgia State University researchers have developed a method to better track changes in prostate and lung cancers and their response to treatment, without the limitations associated with radiation.

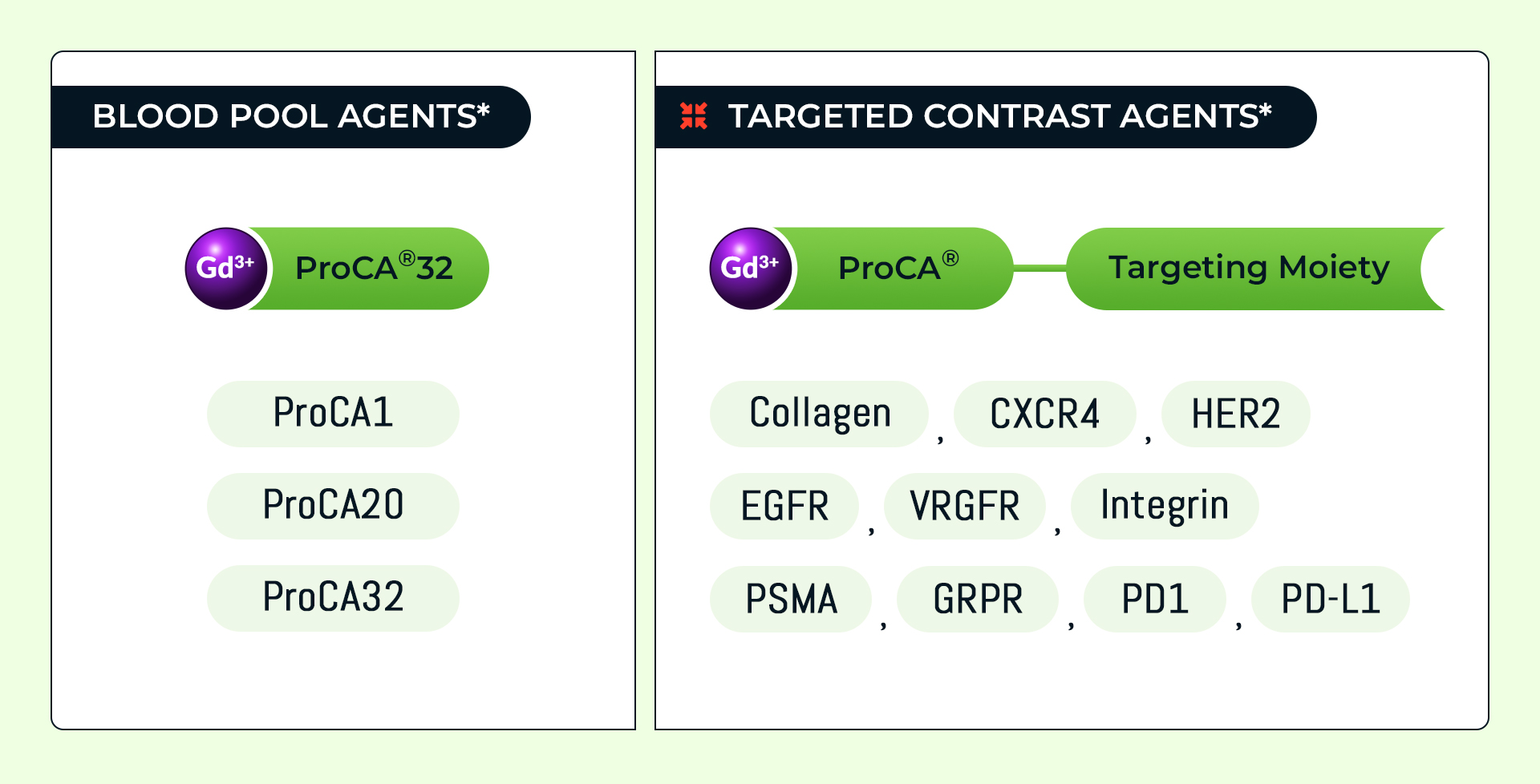

Their findings were published Wednesday, Nov. 17 in Scientific Reports by Nature. The researchers developed a new imaging agent, which they named ProCA1.GRPR, and demonstrated that it penetrates tumors strongly and can target the gastrin-releasing peptide receptor expressed on the surface of diseased cells, including those of prostate, cervical and lung cancers.

“ProCA1.GRPR has a strong clinical translation for human application and represents a major step forward in the quantitative imaging of disease biomarkers without the use of radiation,” said Jenny Yang, lead author on the paper, Distinguished University Professor and associate director of the Center for Diagnostics and Therapeutics at Georgia State. “This information is valuable for staging disease progression and monitoring treatment effects.”

The researchers’ results are an important advance for molecular imaging: the agent can quantitatively detect the expression level and spatial distribution of disease predictors without using radiation.

“Our discovery is of great interest to both chemists and clinicians for disease diagnosis, including noninvasive early detection of human diseases, cancer biology, molecular basis of human diseases and translational research with preclinical and clinical applications,” said Shenghui Xue, co-author on the paper and postdoctoral researcher in Georgia State’s Department of Chemistry.

Improved imaging agents such as ProCA1.GRPR have implications in understanding disease development and treatment.

Protein MRI contrast agent with unprecedented metal selectivity and sensitivity for liver cancer imaging

Researchers led by Georgia State University have developed the first robust, noninvasive method for detecting early-stage liver cancer and liver metastases, as well as other liver diseases such as cirrhosis and liver fibrosis. Their findings were published in Proceedings of the National Academy of Sciences on May 26, 2015.

More than 700,000 people are diagnosed with liver cancer each year. Liver cancer is a leading cause of cancer deaths worldwide, accounting for more than 600,000 deaths annually, according to the American Cancer Society. The rate of liver cancer in the U.S. has increased sharply because of several factors, including chronic alcohol abuse, obesity and insulin resistance.

ProCA32, the contrast agent newly developed by Shenghui Xue, Jenny J. Yang et al., allows imaging of liver tumors that measure less than 0.25 millimeters. The agent is more than 40 times more sensitive than the clinically approved agents commonly used today to detect tumors in the liver.

ProCA32 widens the MRI detection window, which was found to be essential for obtaining high-quality, high-resolution images of the liver. This has important medical implications for imaging various liver diseases, identifying the origin of cancer metastasis, monitoring cancer treatment and guiding therapeutic interventions such as drug delivery.

InLighta Patents

Academic Papers and Presentations by Dr. Jenny Yang

Explore Dr. Jenny Yang’s related academic papers, conference presentations, and more.